Discovery

Given the power to have AI create anything… what do you specifically ask for?

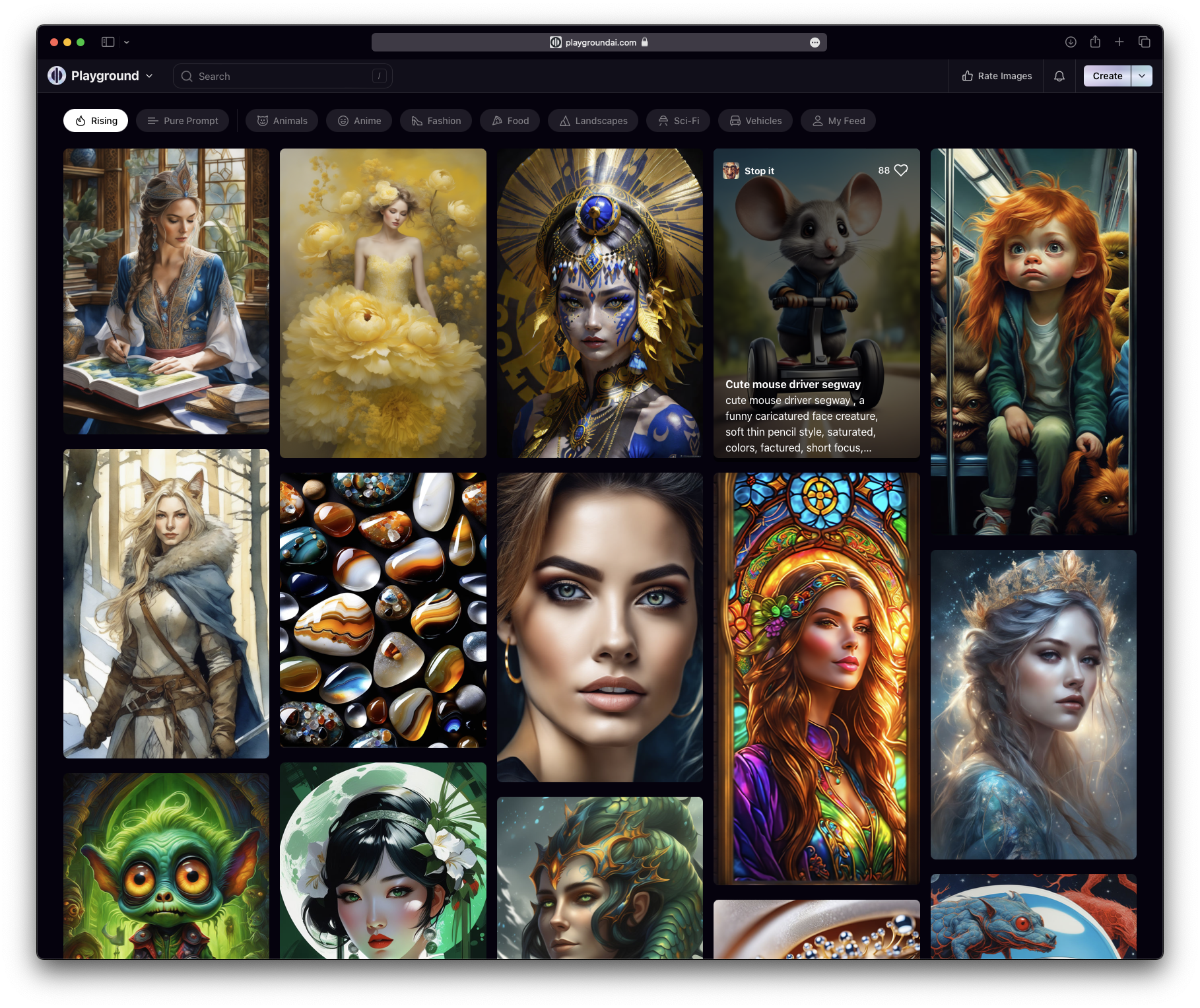

While exploring the latest AI-powered apps, I stumbled upon a clever solution to a classic design problem: when you can instruct AI to create anything, what do you actually ask for?

Generative AI is inherently stochastic: run the same prompt with a different random seed and you get a different result. As a result, getting the outcome you want usually takes rounds of iteration and experimentation, whether because the instructions were ambiguous or because the results are unpredictable.

Take this example: I use Playground AI to generate feature images for my articles. Recently I wanted an image of a semi-robotic cat licking its paw. It took 50 attempts before I was happy with the result, and even then the model never quite depicted the cat in the act of licking. And believe me, my early days experimenting with image models were even harder.

So, from a product design perspective, how do you flatten a user’s learning curve? Expecting people to find their way through a maze of trial and error will put many of them off. This may be why many AI-driven platforms, including Playground AI, offer features such as galleries, community feeds, remix options, and collaborative modes.

Seeing how other users approach a problem is invaluable. You can copy their prompts, tweak them to your liking, and speed up your own learning. Seasoned users may outgrow galleries, but for newcomers they are an essential guide.

Clever.

Simulacra and Simulation

Researchers have released an open-source repository accompanying their paper, “Generative Agents: Interactive Simulacra of Human Behavior.” It contains the core simulation module for generative agents: digital characters that convincingly mimic human behavior inside a game world. They go to work, share gossip, plan social events, make friends, and even fall in love. Each agent has its own distinct personality and backstory.

In other news, OpenAI recently acquired Global Illumination, the team behind Biomes, a widely used open-source MMORPG that plays like a cross between Minecraft and Roblox. Players can build, gather resources, play mini-games, and more, right in their web browsers. Given that the Generative Agents researchers used ChatGPT under the hood, does this acquisition hint that OpenAI believes in a future of AI-driven gaming?

I explored this subject two weeks ago in Good Game. Everywhere I look, I see enthusiasts brainstorming potential applications. For example, there’s huge potential in AI that composes music in response to dialogue, to emotional cues detected in text or voice, or even to biometric signals like heart rate captured by sensor-laden gear. Then there are generative chat systems where dialogue paths are created on the fly, shaped entirely by a character’s personality, memories, and surroundings. We have yet to see these ideas fully realized and adopted by the mainstream, but the early signs are promising. After all, AI and gaming have always been intertwined.

Foundational models? Apps? Products?

Facebook’s parent company, Meta, is gearing up to release a large language model that helps developers generate code automatically, putting it in direct competition with tools from giants like OpenAI and Google. The model, called Code Llama, is expected to be open source and could launch as soon as next week. Coming after Llama 2, it fits Meta’s pattern of releasing open models that are available to essentially everyone except competing tech giants.

Looking at the current trajectory, it’s clear that every major tech player will soon have its own language model. The list already includes OpenAI, Microsoft, Google, and Facebook, with Apple rumored to be next in line. Given Alexa and AWS, Amazon probably isn’t far behind either. It makes sense for Big Tech to invest in their own generative AI platforms, since they have both the expertise and the deep pockets required to train such models. The lingering question, however, is how all this will translate into tangible products.

OpenAI’s ChatGPT was a game-changer, but it sometimes feels more like a tech demo than a polished product. Google and Microsoft have launched their own versions, though Google’s innovation in this space has been somewhat stagnant lately. Amazon’s and Apple’s strategies remain under wraps, but updates to Alexa and Siri are probably on the horizon. Yet these feel predictable and, frankly, lackluster. There’s clearly demand: just look at how people are jailbreaking their Google Nest Minis to run the latest language models. I’d expect these big companies to be even more innovative than the hobbyists.

Microsoft seems to be a step ahead, having integrated conversational assistants into many of its platforms, such as Bing, Windows, and Visual Studio Code. As an avid user of GitHub Copilot, I’m keen to see what Meta’s release means for AI-driven programming. Consider Cursor, an AI-first code editor. Here, AI isn’t just an add-on; it’s the core. If you’re a fan of Visual Studio Code, Cursor will feel familiar, just supercharged with AI. The upside is that products like Cursor could soon use open-source models instead of pricey proprietary ones, making them cheaper to run.

As I argued in my piece Generative Product-Market Fit, the real winners won’t be the ones just building infrastructure, but the ones crafting standout products, and we have no idea yet what those will be. Maybe Khoj, a Y Combinator-backed startup? (They’re building an open-source, “superhuman” companion that integrates with your digital life, making it easy to search and chat with all your personal data.) That remains to be seen.

Considering a startup idea, or eager to dig deeper into the under-the-radar gems we discuss here? Book a 1:1 call with me. Let’s discover the next big thing together, before it’s everywhere.
